ECCV 2024

AnyHome: Open-Vocabulary Generation of Structured and Textured 3D Homes

¹Brown University

²Shenzhen College of International Education

*[Equal Contribution]

†[Corresponding Author]

Abstract

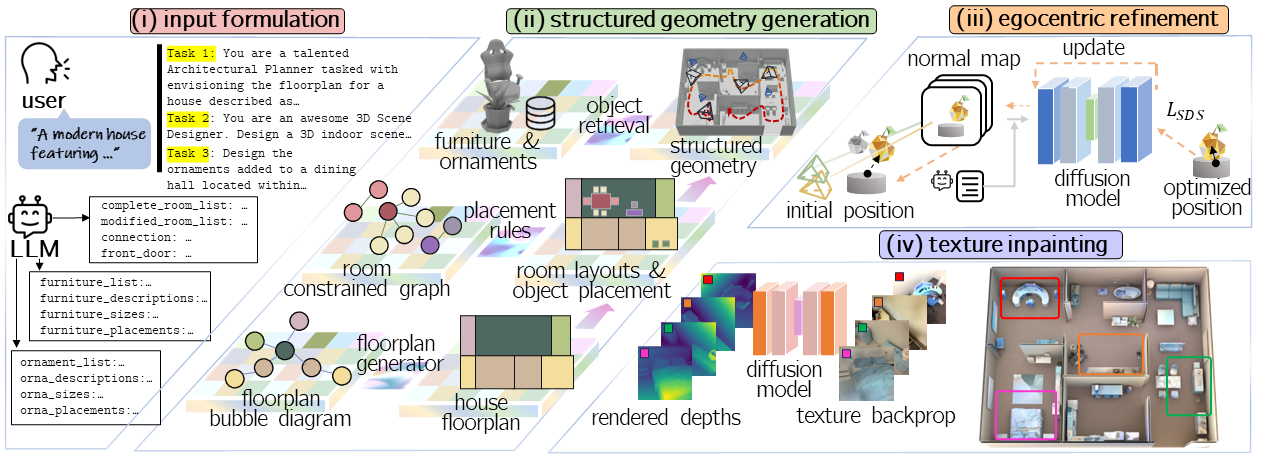

We introduce AnyHome, a framework that translates open-vocabulary descriptions, ranging from simple labels to elaborate paragraphs, into well-structured and textured 3D indoor scenes at house scale. Inspired by cognition theories, AnyHome employs an amodal structured representation to capture 3D spatial cues from textual narratives and then uses egocentric inpainting to enrich these scenes. To this end, we begin by using specially designed template prompts for Large Language Models (LLMs), which enable precise control over the textual input. We then use intermediate representations to keep the spatial structure consistent, ensuring that the 3D scenes align closely with the textual description. Next, we apply a Score Distillation Sampling (SDS) process to refine the placement of objects. Lastly, an egocentric inpainting process enhances the realism and appearance of the scenes. AnyHome stands out due to its hierarchical structured representation combined with the versatility of open-vocabulary text interpretation. This allows for extensive customization of indoor scenes at various levels of granularity. We demonstrate that AnyHome can reliably generate a range of diverse indoor scenes, characterized by their detailed spatial structures and textures, all corresponding to the free-form textual inputs.

Results: Open-Vocabulary Generation

We show open-vocabulary generation results, including a bird's-eye view (left), egocentric views (middle), and an egocentric tour (right). AnyHome comprehends and extends the user’s textual input and produces structured scenes with realistic textures. It can create a serene and culturally rich environment (“tea house”), synthesize unique house types (“cat cafe”), and render a more dramatic and stylized ambiance (“haunted house”).

Results: Open-Vocabulary Editing

The examples showcase AnyHome's ability to modify room types, layouts, object appearances, and overall design through free-form user input. AnyHome also supports comprehensive style alterations and sequential edits, all made possible by its hierarchical structured geometric representation and robust text controllability.

Method

Taking a free-form textual input, our pipeline generates a house-scale scene by: (i) comprehending and elaborating on the user’s textual input through querying an LLM with templated prompts; (ii) converting textual descriptions into base geometry using structured intermediate representations; (iii) employing a Score Distillation Sampling (SDS) process with a differentiable renderer to refine object placements; and (iv) applying depth-conditioned texture inpainting for egocentric texture generation.
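The four stages can be read as a simple data flow. The sketch below is only an illustration of that flow under stated assumptions, not the authors' implementation: every name in it (`Room`, `PlacedObject`, `Scene`, `elaborate_prompt`, `build_base_geometry`, `refine_placement`, `inpaint_textures`, `generate_home`, `stub_llm`) is hypothetical, the LLM is replaced by a stub callable, the layout is a naive grid, and stages (iii) and (iv) are placeholders that do not run SDS or inpainting.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Room:
    name: str
    objects: List[str] = field(default_factory=list)


@dataclass
class PlacedObject:
    room: str
    label: str
    position: Tuple[float, float]  # (x, y) on the floor plane; units are arbitrary


@dataclass
class Scene:
    rooms: List[Room]
    objects: List[PlacedObject]
    textured: bool = False


def elaborate_prompt(user_text: str,
                     llm: Callable[[str], Dict[str, List[str]]]) -> List[Room]:
    """Stage (i): fill a prompt template and let the LLM expand the input."""
    template = "List the rooms and the objects in each room for: {text}"
    reply = llm(template.format(text=user_text))
    return [Room(name, list(objs)) for name, objs in reply.items()]


def build_base_geometry(rooms: List[Room]) -> Scene:
    """Stage (ii): turn the structured description into an initial layout.

    Assumption: a naive grid stands in for the structured intermediate
    representations described in the text.
    """
    placed = []
    for r_idx, room in enumerate(rooms):
        for o_idx, label in enumerate(room.objects):
            placed.append(PlacedObject(room.name, label,
                                       (4.0 * r_idx + 1.0, 1.0 + o_idx)))
    return Scene(rooms, placed)


def refine_placement(scene: Scene) -> Scene:
    """Stage (iii): placeholder for SDS-based refinement (identity here)."""
    return scene


def inpaint_textures(scene: Scene) -> Scene:
    """Stage (iv): placeholder for depth-conditioned inpainting (sets a flag)."""
    return Scene(scene.rooms, scene.objects, textured=True)


def generate_home(user_text: str,
                  llm: Callable[[str], Dict[str, List[str]]]) -> Scene:
    rooms = elaborate_prompt(user_text, llm)
    scene = build_base_geometry(rooms)
    scene = refine_placement(scene)
    return inpaint_textures(scene)


def stub_llm(prompt: str) -> Dict[str, List[str]]:
    # Hypothetical canned reply standing in for a real LLM call.
    return {"tea room": ["low table", "floor cushion"], "kitchen": ["stove"]}


home = generate_home("tea house", stub_llm)
print(len(home.rooms), len(home.objects), home.textured)  # 2 3 True
```

Keeping each stage as a function over an explicit `Scene` value mirrors the role the text gives to the structured representation: because rooms and objects stay addressable after generation, an edit request could in principle re-run only the stages it affects.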

Citations

@inproceedings{fu2024anyhome,
  title={Anyhome: Open-vocabulary generation of structured and textured 3d homes},
  author={Fu, Rao and Wen, Zehao and Liu, Zichen and Sridhar, Srinath},
  booktitle={European Conference on Computer Vision},
  pages={52--70},
  year={2024},
  organization={Springer}
}

Acknowledgements

This research was supported by AFOSR grant FA9550-21-1-0214. The authors thank Dylan Hu, Selena Ling, Kai Wang, and Daniel Ritchie.

Contact

Rao Fu (rao_fu@brown.edu)